[Paper Review] KG-GPT: A General Framework for Reasoning on Knowledge Graphs Using Large Language Models

“KG-GPT: A General Framework for Reasoning on Knowledge Graphs Using Large Language Models”, Findings of EMNLP 2023. All figures are captured from the paper.

TL;DR

KG-GPT is a multi-task framework that applies LLMs to reasoning over knowledge graphs (KGs). It is evaluated on KB-based fact verification and KGQA benchmarks. It works in three steps: breaking the sentence into sub-sentences, retrieving the relevant subgraph, and deriving a logical conclusion.

Background

KB-based fact verification

A task to determine whether a sentence is supported or refuted by a knowledge base (KB).

The required ability is to consult a KB containing relevant information and to use that information to determine whether the statement is true or false.

Sentence: Barack Obama was born in Hawaii.

Fact1: Barack Obama was born on August 4, 1961.

Fact2: Hawaii became a state in the United States on August 21, 1959.

Combined with a KB fact stating his birthplace (Honolulu, Hawaii), we can conclude that the sentence is true.
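As a minimal sketch of this idea, assume the KB is a set of (head, relation, tail) triples and that the sentence has already been mapped to a single triple. Both are simplifications for illustration, and the entity and relation names are hypothetical. Treating a missing triple as a refutation is a closed-world assumption that real datasets do not necessarily make.

```python
# Toy KB of (head, relation, tail) triples; names are illustrative only.
KB = {
    ("Barack_Obama", "birthPlace", "Hawaii"),
    ("Barack_Obama", "birthDate", "1961-08-04"),
}


def verify(claim_triple, kb):
    """Label a claim as SUPPORTED if its triple is in the KB, else REFUTED.

    Assumes a closed world: anything not in the KB counts as refuted.
    """
    return "SUPPORTED" if claim_triple in kb else "REFUTED"


label = verify(("Barack_Obama", "birthPlace", "Hawaii"), KB)
```

The difficult part in practice is the step this sketch skips: mapping a free-form sentence to the right entities and relations in the KB.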

What is KGQA?

The task of answering a natural-language question over a KG, either by generating a query or by reasoning over the graph directly.

Question: What does jamaican people speak?

Answer: Jamaican English, m.01234y
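To make the task concrete, here is a small sketch of answering such a question once it has been mapped to a (head entity, relation) pair. The triples and relation names are made up for illustration and do not follow any real KG schema.

```python
from collections import defaultdict

# Hypothetical triples; relation names are illustrative, not a real schema.
TRIPLES = [
    ("Jamaica", "languages_spoken", "Jamaican English"),
    ("Jamaica", "capital", "Kingston"),
]


def build_index(triples):
    """Index tails by (head, relation) so one-hop lookups are cheap."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def answer(index, head, relation):
    """Return every tail entity reachable from head via relation."""
    return index.get((head, relation), [])


answers = answer(build_index(TRIPLES), "Jamaica", "languages_spoken")
```

Multi-hop questions chain several such lookups, which is where choosing the right relations at each hop becomes the main challenge.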

KG-GPT

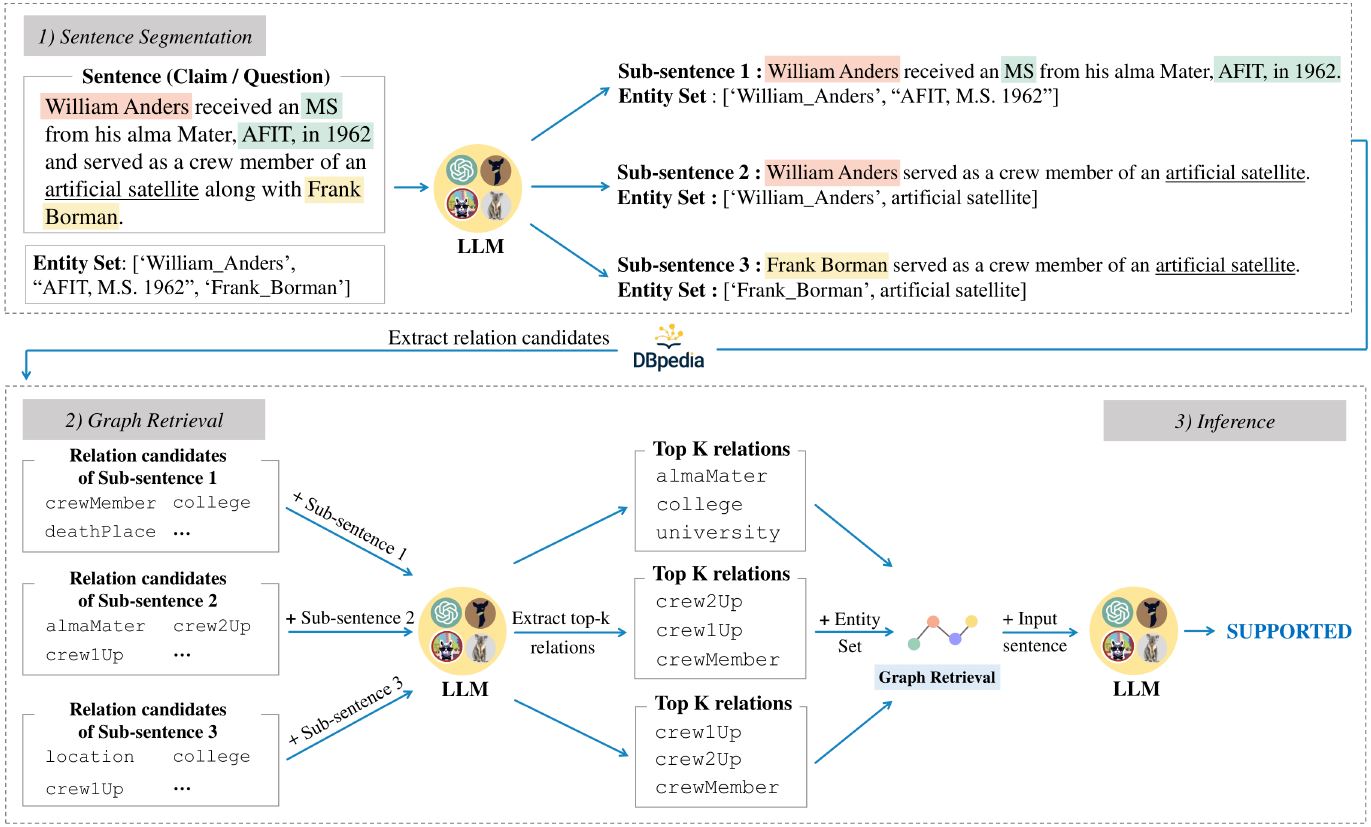

KG-GPT has three stages: Sentence Segmentation, Graph Retrieval, and Inference.

1) Sentence Segmentation

To support multi-hop reasoning, KG-GPT takes a divide-and-conquer approach: it breaks the sentence down into sub-sentences, each of which contains a single relation.
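The segmentation step can be sketched as a few-shot prompt plus a small parser. The prompt wording, the bullet output format, and the `fake_llm` stand-in are all assumptions made for this example; they are not the prompts used in the paper.

```python
from typing import Callable, List

# Hypothetical few-shot demonstration; not the prompt from the paper.
FEW_SHOT = (
    "Split the sentence into sub-sentences that each contain one relation.\n"
    "Sentence: A's spouse was born in B.\n"
    "Sub-sentences:\n"
    "- A has a spouse.\n"
    "- The spouse was born in B.\n"
)


def build_segmentation_prompt(sentence: str) -> str:
    """Append the new sentence to the few-shot demonstration."""
    return f"{FEW_SHOT}Sentence: {sentence}\nSub-sentences:\n"


def parse_sub_sentences(response: str) -> List[str]:
    """Keep only lines formatted as '- <sub-sentence>'."""
    return [
        line[2:].strip()
        for line in response.splitlines()
        if line.startswith("- ")
    ]


def segment(sentence: str, llm: Callable[[str], str]) -> List[str]:
    """Run segmentation with any callable that maps a prompt to text."""
    return parse_sub_sentences(llm(build_segmentation_prompt(sentence)))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call, returning a canned response."""
    return "- X directed Y.\n- Y stars Z.\n"


sub_sentences = segment("X directed Y, which stars Z.", fake_llm)
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client.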

2) Graph Retrieval

To reach an accurate answer, it is important to find the subgraph related to the question. KG-GPT first collects all relations connected to the entities in the given sentence. It then feeds each sub-sentence and the candidate relations to the LLM, which picks the top-k relations. The final graph is built from all triples that contain those top-k relations.
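A rough sketch of this retrieval step follows. The LLM's relation ranking is replaced by a hypothetical word-overlap ranker (`overlap_ranker`) so the example runs on its own, and the final filter keeps only triples that touch the given entities, which is an assumption of this sketch rather than a detail stated above.

```python
from typing import Callable, List, Set, Tuple

Triple = Tuple[str, str, str]


def candidate_relations(kg: List[Triple], entities: Set[str]) -> List[str]:
    """Collect every relation on a triple that touches one of the entities."""
    return sorted({r for h, r, t in kg if h in entities or t in entities})


def overlap_ranker(sub_sentence: str, relations: List[str]) -> List[str]:
    """Stand-in for the LLM: rank relations by word overlap with the text."""
    words = set(sub_sentence.lower().split())
    return sorted(
        relations,
        key=lambda r: (-len(words & set(r.lower().split("_"))), r),
    )


def retrieve_subgraph(
    kg: List[Triple],
    entities: Set[str],
    sub_sentence: str,
    rank_relations: Callable[[str, List[str]], List[str]],
    k: int = 2,
) -> List[Triple]:
    """Keep triples whose relation is among the top-k ranked candidates."""
    candidates = candidate_relations(kg, entities)
    top_k = set(rank_relations(sub_sentence, candidates)[:k])
    # Assumption of this sketch: only triples touching the entities are kept.
    return [
        (h, r, t)
        for h, r, t in kg
        if r in top_k and (h in entities or t in entities)
    ]


KG = [
    ("Inception", "directed_by", "Christopher_Nolan"),
    ("Inception", "release_year", "2010"),
    ("Inception", "starred_actors", "Leonardo_DiCaprio"),
    ("Titanic", "starred_actors", "Leonardo_DiCaprio"),
]

subgraph = retrieve_subgraph(
    KG, {"Inception"}, "Inception was directed by someone", overlap_ranker, k=1
)
```

Restricting the LLM's choice to relations that actually exist around the entities keeps the candidate list short and prevents it from naming relations the KG does not contain.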

3) Inference

The original sentence and the constructed graph are fed to the LLM to derive a logical conclusion. In fact verification, the LLM decides whether the sentence is supported or refuted based on the retrieved graph; in question answering, it identifies the most probable entity in the retrieved graph.
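The inference step can be sketched as linearizing the retrieved triples into text and asking the model for a label or an entity. The instruction wording, the triple format, and the `fake_llm` stand-in are hypothetical choices for this example, not the paper's prompts.

```python
from typing import Callable, List, Tuple

Triple = Tuple[str, str, str]


def linearize(triples: List[Triple]) -> str:
    """Render each triple as one '(head, relation, tail)' line."""
    return "\n".join(f"({h}, {r}, {t})" for h, r, t in triples)


def build_inference_prompt(sentence: str, triples: List[Triple], task: str) -> str:
    """Build a prompt for fact verification or question answering."""
    if task == "verification":
        instruction = "Answer SUPPORTED or REFUTED using only the triples."
    elif task == "qa":
        instruction = "Answer with one entity that appears in the triples."
    else:
        raise ValueError(f"unknown task: {task}")
    return (
        f"{instruction}\n"
        f"Triples:\n{linearize(triples)}\n"
        f"Sentence: {sentence}\n"
        "Answer:"
    )


def infer(
    sentence: str,
    triples: List[Triple],
    task: str,
    llm: Callable[[str], str],
) -> str:
    """Send the prompt to any prompt-to-text callable and clean the reply."""
    return llm(build_inference_prompt(sentence, triples, task)).strip()


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call, returning a canned response."""
    return " SUPPORTED\n"


graph = [("Inception", "directed_by", "Christopher_Nolan")]
label = infer("Inception was directed by Christopher Nolan.", graph, "verification", fake_llm)
```

The same function serves both tasks because only the instruction line changes; the graph and sentence are presented identically.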

Experiments

Datasets

- FactKG: A dataset for fact verification based on DBpedia. Claims are categorized as Supported or Refuted.

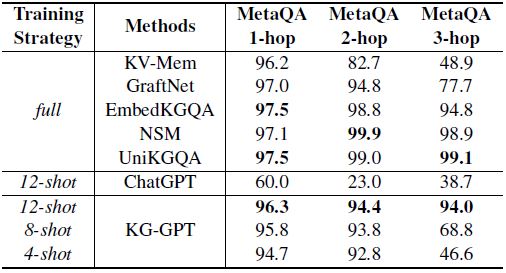

- MetaQA: A KGQA dataset in the movie domain.

Baselines

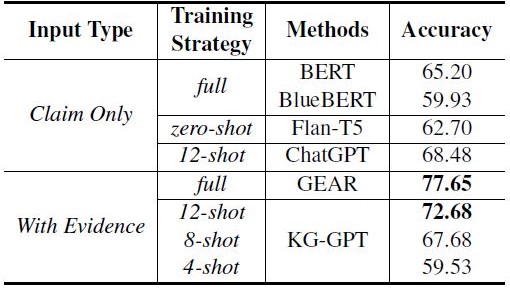

On FactKG, there are two distinct baseline categories: 1) Claim Only, which sees only the input claim and predicts the label, and 2) With Evidence, which combines a graph retriever with a sentence verifier. See the figure for the full list of baselines used in the experiments.

Short Results

On FactKG, KG-GPT reaches notable accuracy. However, it still trails GEAR, suggesting that KG-GPT is limited by relying on only a few few-shot examples. On MetaQA, KG-GPT's accuracy is competitive with that of fully trained models.

Conclusion

- KG-GPT is a multi-task framework composed of three stages, and the experiments show its effectiveness.

- It successfully combines unstructured text and a structured graph in the LLM’s context.

References

[1] Kim, Jiho, et al. “KG-GPT: A General Framework for Reasoning on Knowledge Graphs Using Large Language Models.” arXiv preprint arXiv:2310.11220 (2023).
